Benchmarking Redis and PRedis

At work, I recently was tasked with looking into some NoSQL solutions for upcoming projects. For various reasons, I focused on the open source Redis project. Redis looks to be adding new features quickly and seemed to be a great potential solution.

I then started looking into PHP clients as our current environment is mostly PHP. We require that the client support consistent hashing, and, from a quick search, a couple turned up. PRedis seemed to offer the most potential, and after some quick tests, also seemed to offer the greatest performance. So I set up a more elaborate benchmark of the the client and server package.

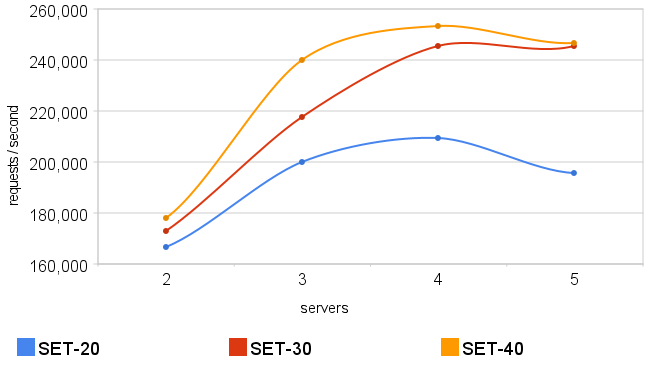

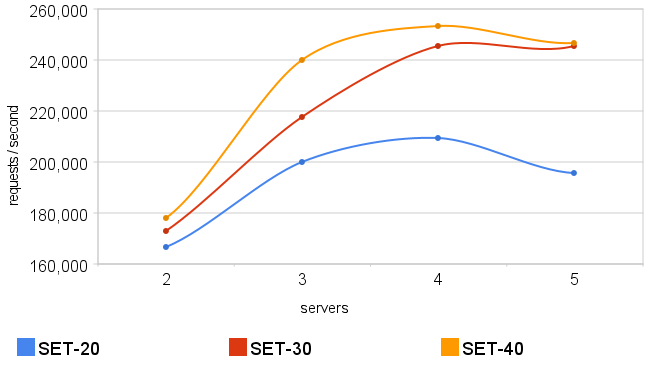

My test setup involved using 5 servers with between 2 and 5 enabled at a time on the clients (ie. I disabled up to 3 of the servers in the client configurations). For performance, I configured the servers to never write to disk, though periodically syncing to disk should not cause too much of a performance loss. In fact performance was most greatly affected by forcing an fsync after every write. I then had 9 other client boxes running the same code base, with all 9 enabled for each test.

Each client would start a master PHP process that forked 20, 30 or 40 child processes to simulate greater and greater load. Each forked PHP process then did 10,000 SETs on random keys with 4 byte payloads (early tests showed that payload size didn't drastically affect the results). I was using the PHP 4.2.6 branch of the PRedis client, and had optimized it a bit so that it did fewer counts of the consistent hash array. I made the optimizations based on some results after profiling the code. I then had the master PHP process on each box repeat the test 5 times to help to average the test results.

I used dsh to start the test simultaneously on all the clients, timing how long it took the dsh process to start and finish executing. This was the amount of time it took to execute (5 repeats) * (9 client boxes) * (20, 30 or 40 client processes / box) * (10,000 requests) = X total requests. I then graphed the results below.

[ ][]{class="caption"}

][]{class="caption"}

This ended up showing that with 9 clients, going up to 4 servers was the point of diminishing returns. Adding the fifth server (with that client box count) did not increase throughput, but rather, the throughput went down. The reason for this is most likely a combination of network interface contention on each client and higher overhead from consistent hashing. So with 9 clients, I found that the sweet spot, with my setup, was 4 servers. By adding more clients, along with more servers, the throughput would increase, instead of the decrease I was starting to see.

[ ]: /static/images/2010/01/redis.png

]: /static/images/2010/01/redis.png